CI/CD with Databricks and Azure DevOps

So you've created notebooks in your Databricks workspace, collaborated with your peers, and now you're ready to operationalize your work. This is a simple process if you only need to copy the notebooks to another folder within the same workspace. But what if you need to separate your DEV and PROD environments?

Things get a little more complicated when it comes to automating code deployment, and that is where CI/CD comes in.

What is CI/CD?

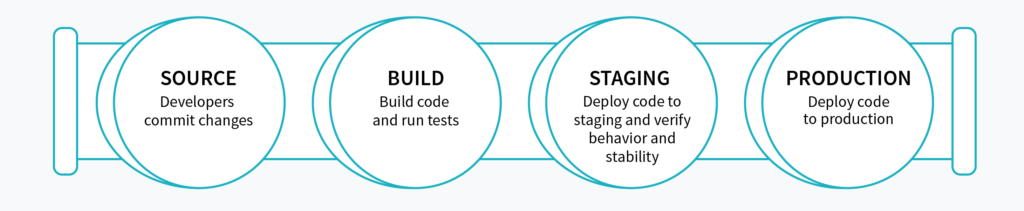

A CI/CD pipeline.

Continuous integration (CI) and continuous delivery (CD) embody a culture, a set of operating principles, and a collection of practices that enable application development teams to deliver code changes more frequently and reliably. The implementation is known as the CI/CD pipeline, and it is one of the best practices for DevOps teams to adopt.

Implementing CI/CD in Azure Databricks

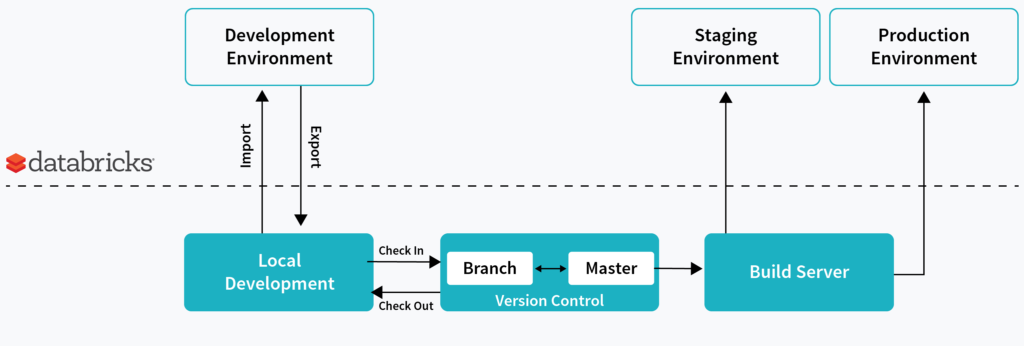

Example of continuous integration and delivery

The flow is simple:

- A developer writes code.

- The developer checks the code into source control.

- The developer branch is then merged into the master branch.

- The code is then deployed to the various environments: DEV, TEST and PROD.

For the purpose of this blog, I will demonstrate how a notebook committed to Azure DevOps can be automatically deployed to another folder of the same workspace.

Prerequisites

Make sure you have the following:

| Prerequisite | Details |
| --- | --- |
| A Databricks workspace | You can follow these instructions if you need to create one. |
| An Azure DevOps project / repo | See here for how to create a new Azure DevOps project and repository. |
| A sample notebook we can use for our CI/CD example | This tutorial will guide you through creating a sample notebook if you need one. |

Binding your DevOps Project

Next, you will need to configure your Azure Databricks workspace to use Azure DevOps, as explained here.

Syncing your notebooks to a Git repo

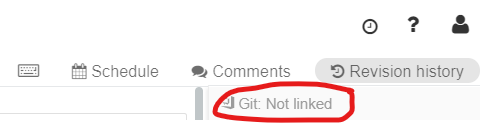

Open your notebook and click Revision history at the top right of the screen. By default, the notebook is not linked to a Git repo; this is normal.

Notebook not linked to a repo

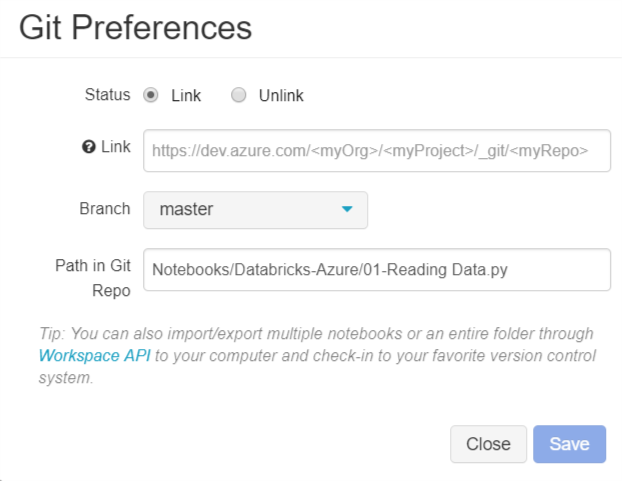

Clicking "Not linked" opens a dialog that asks for the following:

| Field | Value |
| --- | --- |
| Status | Set this to "Link". |
| Link | Replace the values enclosed in <> with the appropriate information. |
| Branch | Leave this as master for now, although it should follow your company's DevOps best practices. |
| Path in Git Repo | The folder in Git where the notebook will be created. |
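The Link field expects your repository URL. As a minimal sketch, assuming the current https://dev.azure.com/<organization>/<project>/_git/<repository> format (older organizations may still use a *.visualstudio.com address), the URL can be assembled like this. The organization, project and repository names below are hypothetical.

```python
from urllib.parse import quote


def azure_repos_url(organization: str, project: str, repository: str) -> str:
    """Build an Azure Repos Git URL in the (assumed) dev.azure.com format."""
    # Percent-encode each segment so names with spaces stay valid in a URL.
    parts = [quote(part, safe="") for part in (organization, project, repository)]
    return "https://dev.azure.com/{}/{}/_git/{}".format(*parts)


if __name__ == "__main__":
    # Hypothetical names: replace them with your own organization, project and repo.
    print(azure_repos_url("contoso", "ADB Demo", "adb-demo"))
    # → https://dev.azure.com/contoso/ADB%20Demo/_git/adb-demo
```

Encoding each segment separately matters mostly for project names, which often contain spaces.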

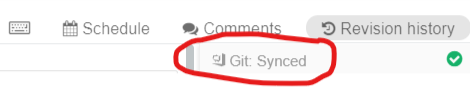

If done properly, you will see the "Git: Synced" status.

Notebook linked to a Git repo

Once synced, you will need to save your changes to Git.

Building your CI/CD Pipeline in Azure DevOps

Now that you have committed your notebook to Azure DevOps, it's time to build your CI/CD pipeline.

What is Azure Pipelines? It's a fully featured continuous integration (CI) and continuous delivery (CD) service that lets you build, test and deploy your code to any platform. You can add more parallel jobs for even faster pipelines, and build and deploy on Microsoft-hosted Linux, macOS and Windows agents.

We'll be concentrating on build and release, leaving test for another blog :)

Creating a new build pipeline

A continuous integration trigger on a build pipeline indicates that the system should automatically queue a new build whenever a code change is committed. This is the CI portion of our process.
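To make the trigger idea concrete, here is a simplified, hypothetical model of a CI trigger with branch and path filters. It is not Azure Pipelines' actual matching code; it only assumes that a build is queued when the pushed branch matches an included branch pattern and, if path filters are set, at least one changed file sits under an included folder.

```python
from fnmatch import fnmatchcase
from typing import Iterable, Optional, Sequence


def should_queue_build(
    branch: str,
    changed_paths: Iterable[str],
    include_branches: Sequence[str],
    include_paths: Optional[Sequence[str]] = None,
) -> bool:
    """Simplified CI trigger: branch filter first, then optional path filter."""
    # The pushed branch must match at least one include pattern (e.g. "master", "release/*").
    if not any(fnmatchcase(branch, pattern) for pattern in include_branches):
        return False
    # With no path filter, any commit on a matching branch queues a build.
    if not include_paths:
        return True
    prefixes = [p.rstrip("/") + "/" for p in include_paths]
    # Otherwise at least one changed file must live under an included folder.
    return any(path.startswith(prefix) for path in changed_paths for prefix in prefixes)


if __name__ == "__main__":
    print(should_queue_build("master", ["notebooks/demo.py"], ["master"]))         # True
    print(should_queue_build("feature/x", ["notebooks/demo.py"], ["master"]))      # False
    print(should_queue_build("master", ["README.md"], ["master"], ["notebooks"]))  # False
```

A path filter like this is a common way to avoid rebuilding when only unrelated files (a README, for instance) change.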

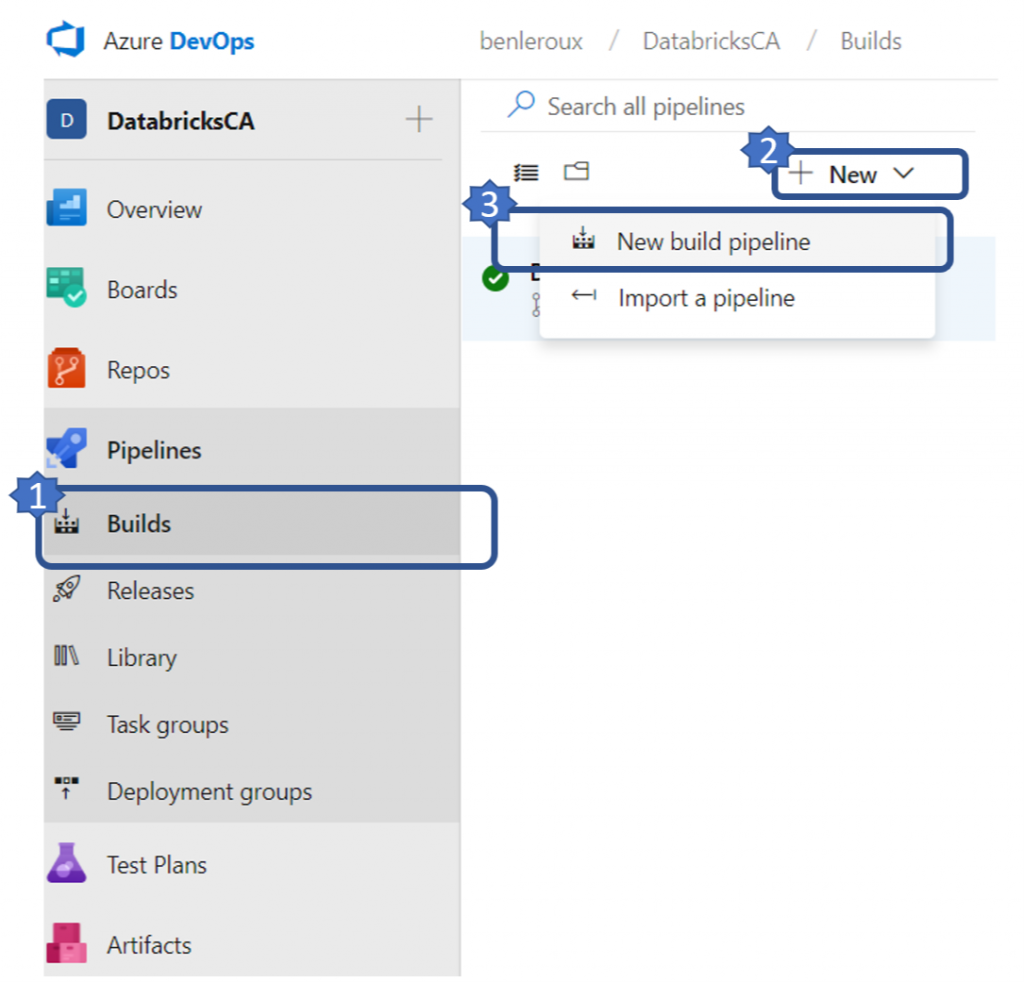

Selecting your project brings you to the project summary, where you will see various options in the navigation bar. The one that matters for us now is "Pipelines". Clicking "Pipelines" reveals a submenu; we'll start with builds.

Then click "New" and then "New build pipeline".

Creating a new build pipeline

Doing so will bring you to the creation screen.

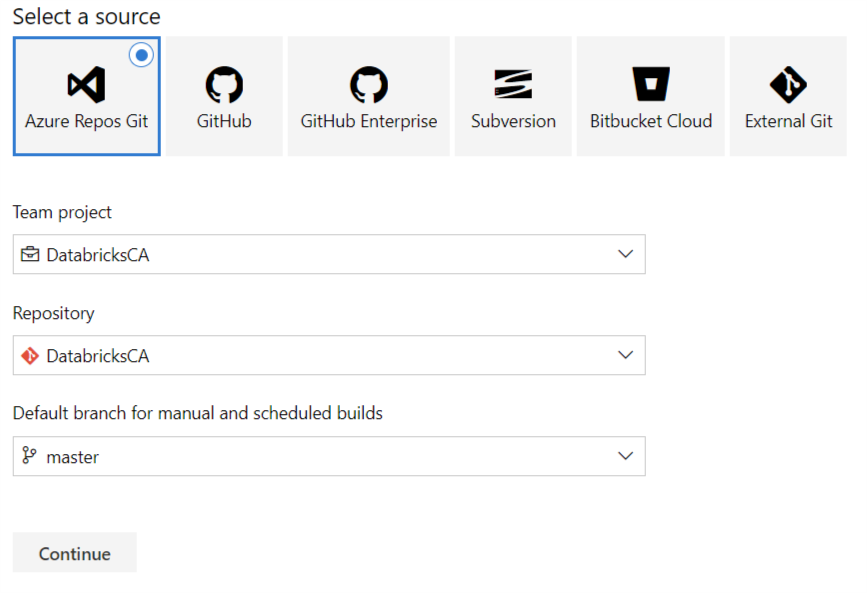

Create new build pipeline

| Field | Value |
| --- | --- |
| Source | Unless you want or need to link to another source, keep the default, "Azure Repos Git". |
| Team Project | Pick the project you created. |
| Repository | Pick the repository you created. |
| Default branch | Keep "master" as the default branch. |

Pressing "Continue" will bring you to the template selection screen. The pipeline we're building pulls the changes from the master branch and builds a drop artifact, which the CD part then pushes to Azure Databricks. Since none of the templates match this, pick "Empty job".

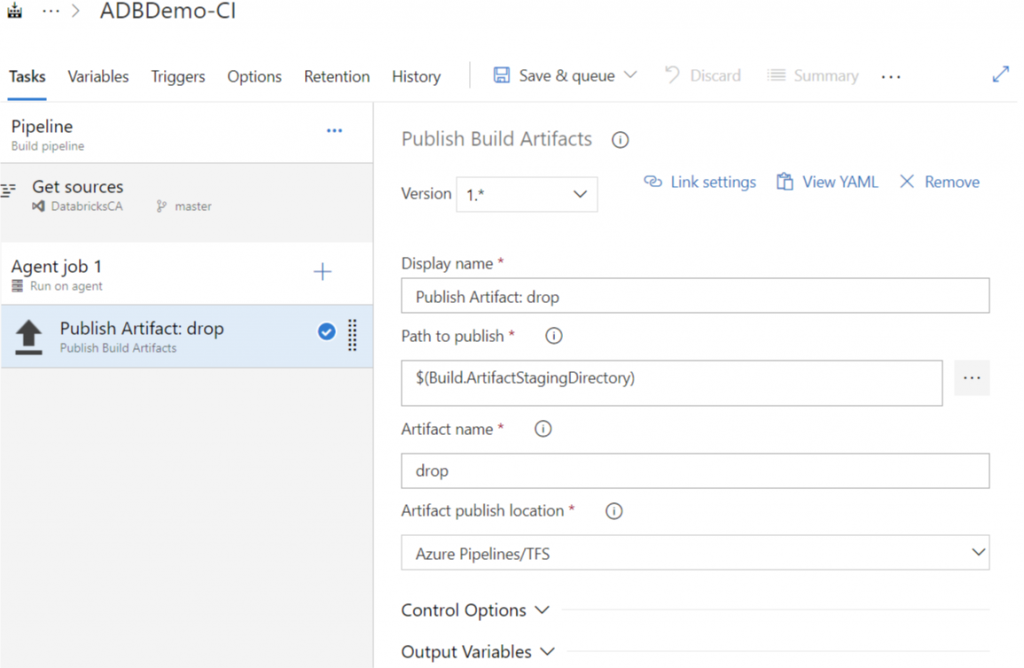

Name your build pipeline ADBDemo-CI and pick the Hosted VS2017 agent pool.

Next, click the "+" sign next to "Agent job 1" to bring up the list of available tasks. Search for and add "Publish Build Artifacts".

Once it is added, click the task; your screen should now look like this:

Adding a new task.

Two settings on this screen are important: the Path to publish and the Artifact name.

This task takes files from your Git repository and creates a package that our release pipeline will use. The Path to publish indicates which folder of your Git repository you want to include in the build. Clicking the ellipsis lets you browse your repository and pick a folder.

The Artifact name identifies the name of the package you will use in the release pipeline. You can keep it as "drop" for simplicity.
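Conceptually, the task takes the folder chosen as the Path to publish and stores it under the Artifact name. The sketch below mimics that locally by copying a folder into a staging directory named after the artifact. It is an illustration only, not how the Publish Build Artifacts task is implemented, and the function name is hypothetical.

```python
import shutil
import tempfile
from pathlib import Path
from typing import List


def publish_artifact(path_to_publish: str, artifact_name: str, staging_dir: str) -> List[str]:
    """Copy the 'Path to publish' folder into <staging_dir>/<artifact_name>.

    Illustration only: mimics the idea of Publish Build Artifacts, not its code.
    Returns the published files, relative to the artifact folder.
    """
    source = Path(path_to_publish)
    if not source.is_dir():
        raise FileNotFoundError(f"Path to publish does not exist: {source}")
    target = Path(staging_dir) / artifact_name
    # dirs_exist_ok (Python 3.8+) lets a re-run copy into an existing artifact folder.
    shutil.copytree(source, target, dirs_exist_ok=True)
    return sorted(p.relative_to(target).as_posix() for p in target.rglob("*") if p.is_file())


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as repo, tempfile.TemporaryDirectory() as staging:
        notebooks = Path(repo) / "notebooks"
        notebooks.mkdir()
        (notebooks / "demo.py").write_text("# Databricks notebook source\nprint('hello')\n")
        print(publish_artifact(str(notebooks), "drop", staging))  # ['demo.py']
```

Everything under the chosen folder ends up in the artifact, which is why it pays to point the Path to publish at the notebooks folder rather than the repository root.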

Queuing your build

Once you've defined your build pipeline, it's time to queue it so that it can be built. This is done by selecting the "Save & queue" or the "Queue" option. Doing so will ask you to save and commit your changes to the build pipeline.
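Queuing can also be scripted. The sketch below only prepares (does not send) a request to the Azure DevOps Builds REST API. It assumes a personal access token (PAT) allowed to queue builds, api-version 6.0, and a hypothetical build definition ID; check these against your own organization before relying on it.

```python
import base64
import json
import urllib.request
from urllib.parse import quote


def build_queue_request(organization: str, project: str, definition_id: int,
                        pat: str, branch: str = "master") -> urllib.request.Request:
    """Prepare (but do not send) a POST that queues a build via the Azure DevOps REST API."""
    url = (f"https://dev.azure.com/{quote(organization, safe='')}/{quote(project, safe='')}"
           "/_apis/build/builds?api-version=6.0")
    body = {"definition": {"id": definition_id}, "sourceBranch": f"refs/heads/{branch}"}
    # A PAT is sent as HTTP Basic auth with an empty user name.
    token = base64.b64encode(f":{pat}".encode("utf-8")).decode("ascii")
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json", "Authorization": f"Basic {token}"},
        method="POST",
    )


if __name__ == "__main__":
    # Hypothetical organization, project, definition ID and token.
    req = build_queue_request("contoso", "ADB Demo", 12, "<your-pat>")
    print(req.get_method(), req.full_url)
    # To actually queue the build: urllib.request.urlopen(req)
```

Keeping request construction separate from sending makes it easy to inspect what would be submitted before touching the real pipeline.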

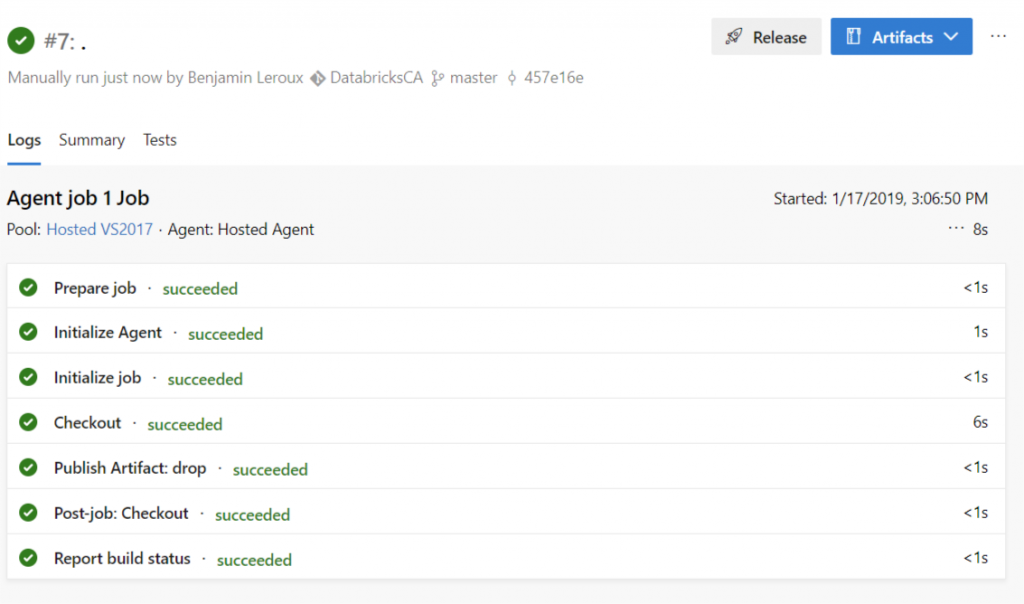

View build progress

After submitting your build to the queue, you can monitor its progress by clicking the # item at the top left, as shown below:

Once the build completes, you should see green check marks on all the steps.

Completed build.

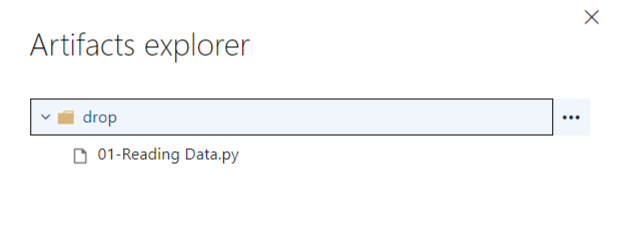
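If you queued the build from a script, you can poll it instead of watching the UI. The Builds REST API reports a status (for example inProgress or completed) and, once completed, a result such as succeeded or failed. The hypothetical helper below assumes you pass it a function that fetches that JSON (for example a GET on _apis/build/builds/<build id>), which keeps the polling logic testable without a network call.

```python
import time
from typing import Any, Callable, Dict


def wait_for_build(fetch_build: Callable[[], Dict[str, Any]],
                   poll_seconds: float = 10.0,
                   timeout_seconds: float = 1800.0) -> str:
    """Poll a build until its status is 'completed', then return its result."""
    deadline = time.monotonic() + timeout_seconds
    while True:
        build = fetch_build()
        if build.get("status") == "completed":
            # e.g. "succeeded", "partiallySucceeded", "failed" or "canceled"
            return build.get("result", "unknown")
        if time.monotonic() >= deadline:
            raise TimeoutError("Build did not complete before the timeout")
        time.sleep(poll_seconds)


if __name__ == "__main__":
    # Canned responses stand in for real GET _apis/build/builds/<id> calls.
    responses = iter([{"status": "notStarted"}, {"status": "inProgress"},
                      {"status": "completed", "result": "succeeded"}])
    print(wait_for_build(lambda: next(responses), poll_seconds=0))  # succeeded
```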

Viewing the content of the build

You can inspect the contents of your build by clicking the blue "Artifact" button at the top right, which lets you browse the contents like so:

Artifact explorer

Building the release pipeline

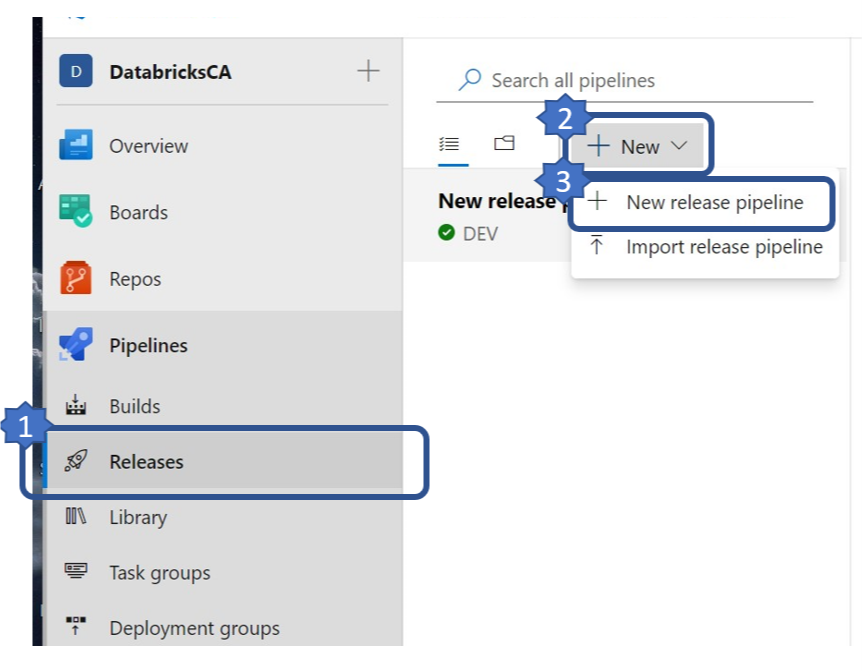

Now that we have created a build, let's set up the delivery portion of the CI/CD pipeline. To do this, go back to the "Pipelines" menu, select "Release" and then "New release pipeline".

Creating a new release pipeline.

Like before, you will select an empty template.

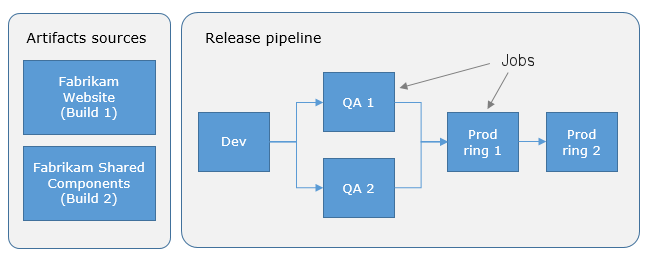

Once that is done, you will need to configure two sections: artifacts and stages. The Microsoft documentation describes an artifact as a deployable component of your application, typically produced by a continuous integration or build pipeline. A stage is described as a logical and independent entity that represents where you want to deploy a release generated from a release pipeline.

Example of a release pipeline.

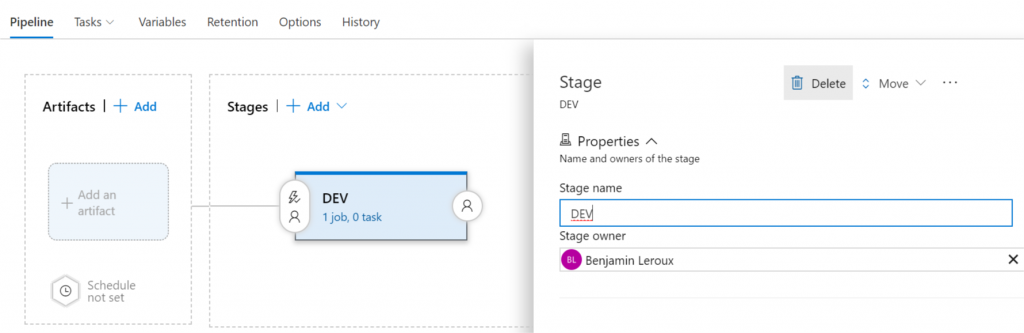

Again, for simplicity, we'll create a single stage called DEV. If done right, your screen should look like this:

Set up a new stage.

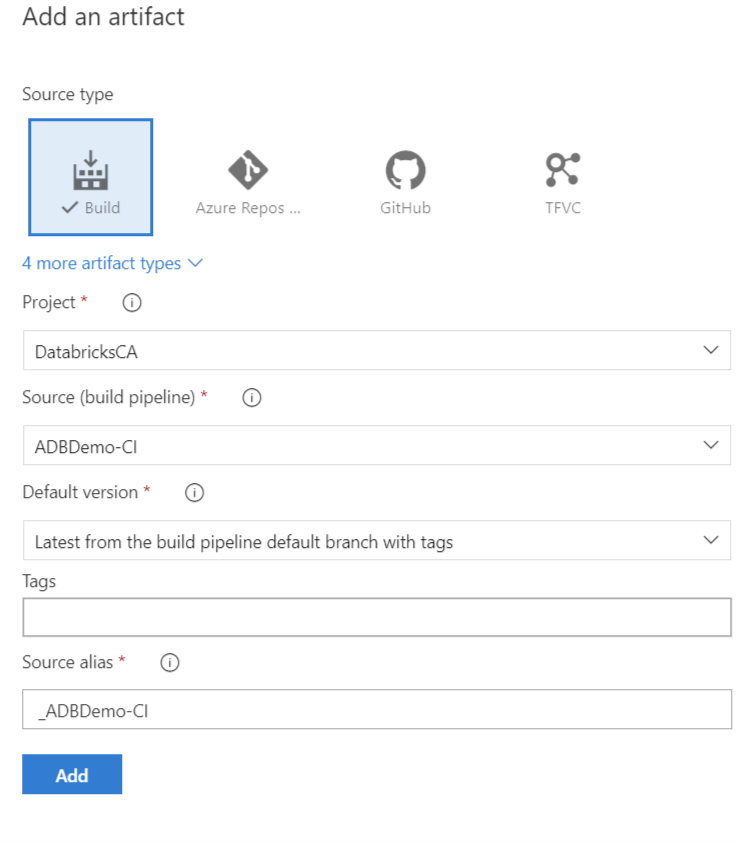

Configuring Artifact

Before configuring the stage, we need to specify the artifacts that this pipeline will use. Click "+ Add" in the Artifacts block and specify the following:

Add new artifact.

| Field | Value |
| --- | --- |
| Source type | Build |
| Project | Select the project you created earlier. |
| Source | Pick the build pipeline you created earlier; it should be ADBDemo-CI. |
| Default version | Pick "Latest from the build pipeline default branch with tags". |
| Tags | Leave blank. |
| Source alias | A unique name that identifies the artifact in the stage portion of this pipeline. The default of _ADBDemo-CI should do. |

Click "Add"
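It helps to know where the artifact ends up on the release agent. Release pipelines download each linked build artifact into a folder named after its source alias, with the artifact name beneath it, so the drop folder is typically reachable as $(System.DefaultWorkingDirectory)/_ADBDemo-CI/drop. The sketch below expands $(...) macros for known variables and leaves unknown ones untouched; the agent path is a made-up example, and unlike Azure DevOps this sketch treats variable names case-sensitively.

```python
import re
from typing import Mapping

# Matches macro syntax such as $(System.DefaultWorkingDirectory).
_MACRO = re.compile(r"\$\(([\w.]+)\)")


def expand_macros(value: str, variables: Mapping[str, str]) -> str:
    """Replace $(Name) with its value; unknown macros are left as they are."""
    return _MACRO.sub(lambda m: variables.get(m.group(1), m.group(0)), value)


def artifact_source_path(source_alias: str, artifact_name: str) -> str:
    """Default download location of a build artifact inside a release job."""
    return f"$(System.DefaultWorkingDirectory)/{source_alias}/{artifact_name}"


if __name__ == "__main__":
    # Hypothetical agent directory; the real value depends on the agent.
    agent_vars = {"System.DefaultWorkingDirectory": "/home/vsts/work/r1/a"}
    print(expand_macros(artifact_source_path("_ADBDemo-CI", "drop"), agent_vars))
    # → /home/vsts/work/r1/a/_ADBDemo-CI/drop
```

This is why the source alias matters: renaming it changes the folder the stage's tasks must point at.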

Configuring Stage

Next, we'll add a task to the DEV stage. Click the "1 job, 0 task" link in the DEV box and then the "+" sign next to "Agent job".

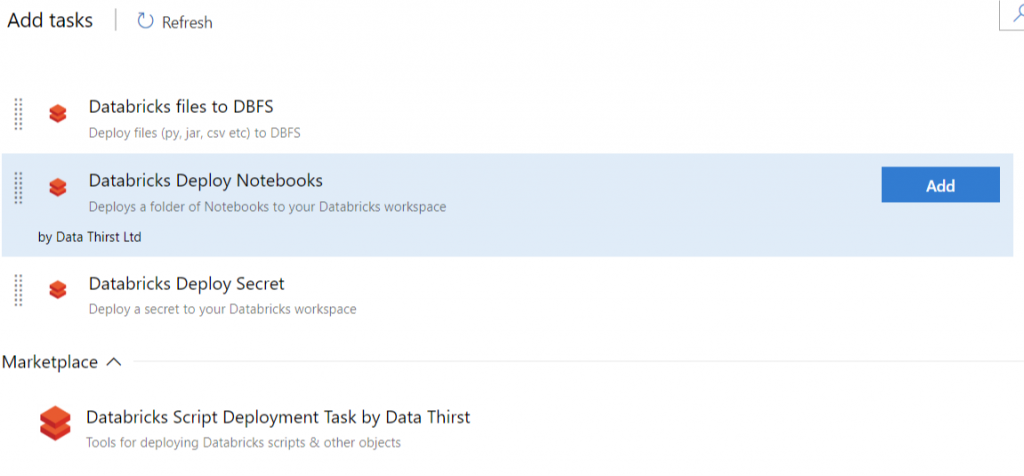

In the search box of the add task screen, search for Databricks; you should see a marketplace task called "Databricks Script Deployment Task by Data Thirst". This extension lets you deploy scripts, secrets and notebooks to Databricks. See here for more details on the tool.

Go ahead and click install.

Once it is installed, you should see new tasks available to you. Select "Databricks Deploy Notebook" and click "Add".

Adding the Databricks task.

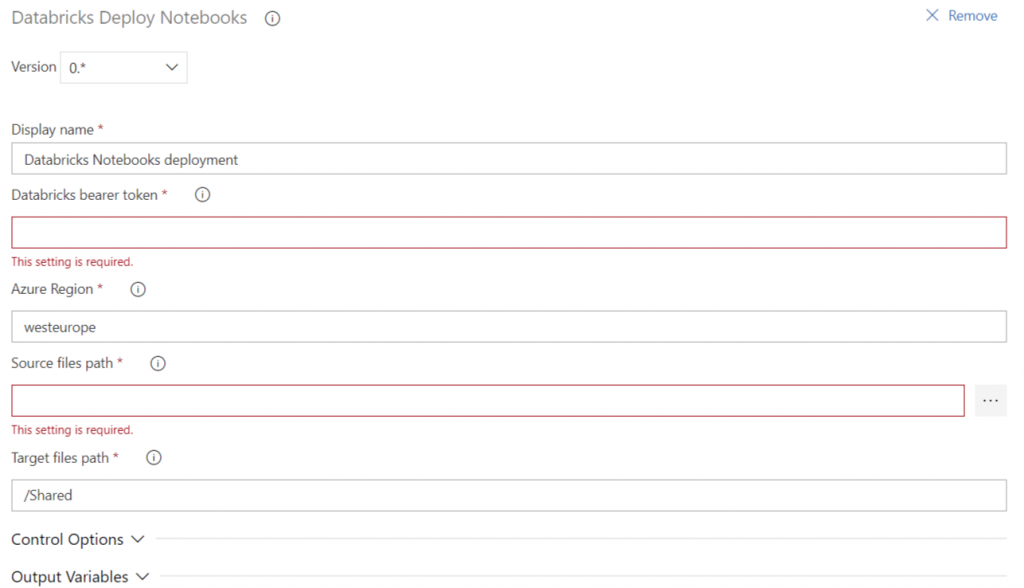

Now configure the newly added task as follows:

Configure Databricks Deploy Notebook task.

| Field | Value |
| --- | --- |
| Display name | Leave the default name. |
| Databricks bearer token | Generate a new user token and paste it here. See this article on how to generate a user token. |
| Azure Region | Take this from your Databricks workspace URL. For example, mine is https://canadaeast.azuredatabricks.net/, so the region is canadaeast. |
| Source files path | Click the ellipsis, browse your linked artifact and pick the folder you want pushed back to Databricks. I picked the "drop" folder. |
| Target files path | The folder in your Databricks workspace you want the notebook imported into. |
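For a sense of what the deploy step does behind the scenes, here is a sketch that prepares one Databricks Workspace API import call (POST /api/2.0/workspace/import) per notebook file in the source folder. It is not the Data Thirst task's code. It assumes notebooks are stored in SOURCE format with .py, .scala, .sql or .r extensions, only handles files directly inside the source folder, and uses the regional https://<region>.azuredatabricks.net host from the table above (newer workspaces use a per-workspace URL instead).

```python
import base64
import json
import tempfile
import urllib.request
from pathlib import Path
from typing import List

# Notebook languages accepted by the Workspace API, keyed by file extension (assumed mapping).
LANGUAGES = {".py": "PYTHON", ".scala": "SCALA", ".sql": "SQL", ".r": "R"}


def build_import_requests(region: str, token: str, source_dir: str,
                          target_dir: str) -> List[urllib.request.Request]:
    """Prepare one POST /api/2.0/workspace/import call per notebook in source_dir."""
    host = f"https://{region}.azuredatabricks.net"
    headers = {"Authorization": f"Bearer {token}", "Content-Type": "application/json"}
    prepared = []
    # Only top-level files are handled; sub-folders are out of scope for this sketch.
    for file in sorted(Path(source_dir).iterdir()):
        language = LANGUAGES.get(file.suffix.lower())
        if not file.is_file() or language is None:
            continue
        payload = {
            # Workspace notebook paths carry no file extension.
            "path": f"{target_dir.rstrip('/')}/{file.stem}",
            "format": "SOURCE",
            "language": language,
            "content": base64.b64encode(file.read_bytes()).decode("ascii"),
            "overwrite": True,
        }
        prepared.append(urllib.request.Request(
            f"{host}/api/2.0/workspace/import",
            data=json.dumps(payload).encode("utf-8"),
            headers=headers,
            method="POST",
        ))
    return prepared


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as drop:
        (Path(drop) / "demo.py").write_bytes(b"# Databricks notebook source\nprint('hello')\n")
        for req in build_import_requests("canadaeast", "<your-token>", drop, "/Shared"):
            print(req.full_url, json.loads(req.data)["path"])
        # Sending them would be: urllib.request.urlopen(req) for each request.
```

Setting overwrite to True mirrors the usual CD expectation that each release replaces the previously deployed notebook.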

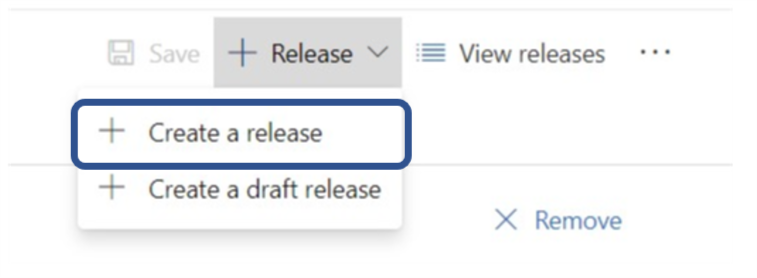

The final step is to create a release by clicking the "+ Release" drop-down and selecting "Create a new release". Click "Create" on the next screen.

Creating the release

Creating a new release.

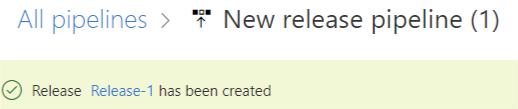

See progress.

Like before, click the "Release-#" link on the top left to see the progress.

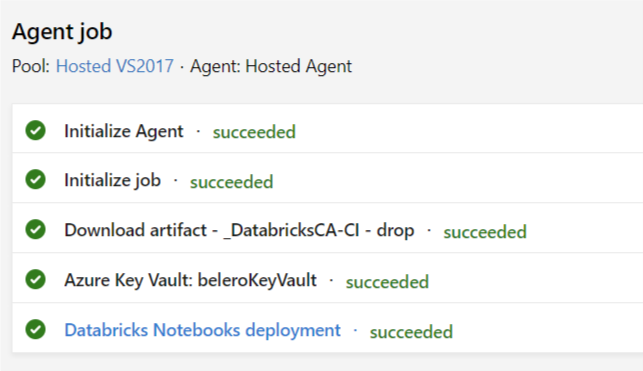

If your release ran successfully, the logs should show green check marks across the board. I strongly suggest going through each step and looking at its output, as it helps you understand what's going on under the hood.

Successful execution.

All is done!

If all ran well, you should now see the notebook inside the "/Shared" folder of your Databricks workspace.
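You can also confirm the deployment programmatically. The sketch below assumes the Databricks Workspace API list endpoint (GET /api/2.0/workspace/list), which returns the folder's contents under an objects key; the notebook path /Shared/demo is hypothetical.

```python
import json
import urllib.request
from typing import Any, Dict
from urllib.parse import urlencode


def build_list_request(host: str, token: str, folder: str = "/Shared") -> urllib.request.Request:
    """Prepare a GET /api/2.0/workspace/list call for one workspace folder."""
    query = urlencode({"path": folder})
    url = f"{host.rstrip('/')}/api/2.0/workspace/list?{query}"
    return urllib.request.Request(url, headers={"Authorization": f"Bearer {token}"})


def notebook_present(list_response: Dict[str, Any], notebook_path: str) -> bool:
    """True if the listing contains a notebook at exactly notebook_path."""
    # An empty folder can come back without an "objects" key at all.
    return any(
        obj.get("object_type") == "NOTEBOOK" and obj.get("path") == notebook_path
        for obj in list_response.get("objects", [])
    )


if __name__ == "__main__":
    req = build_list_request("https://canadaeast.azuredatabricks.net/", "<your-token>")
    print(req.full_url)  # https://canadaeast.azuredatabricks.net/api/2.0/workspace/list?path=%2FShared
    # With a real token:
    # with urllib.request.urlopen(req) as resp:
    #     print(notebook_present(json.load(resp), "/Shared/demo"))
```

A check like this could serve as a simple smoke test at the end of the DEV stage.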